AI

cross-posted from: https://lemmy.world/post/20999023

> Hello, world!
>
> I'm currently drafting a low-level research essay on the use of generative technology and how it affects grades beyond high-schools/realschule/K12/etc.
>
> The responses are completely anonymous and, as I am working on this solo, will NEVER be sold to data collectors and will only be shared with my professor for a final grade.
>
> Thanks in advance to everyone who participates!

theintercept.com

cross-posted from: https://lemmy.ml/post/21508620

> The plan, mentioned in a new 76-page wish list by the Department of Defense’s Joint Special Operations Command, or JSOC, outlines advanced technologies desired for the country’s most elite, clandestine military efforts. “Special Operations Forces (SOF) are interested in technologies that can generate convincing online personas for use on social media platforms, social networking sites, and other online content,” the entry reads.
>
> The document specifies that JSOC wants the ability to create online user profiles that “appear to be a unique individual that is recognizable as human but does not exist in the real world,” with each featuring “multiple expressions” and “Government Identification quality photos.”
>
> In addition to still images of faked people, the document notes that “the solution should include facial & background imagery, facial & background video, and audio layers,” and JSOC hopes to be able to generate “selfie video” from these fabricated humans. These videos will feature more than fake people: Each deepfake selfie will come with a matching faked background, “to create a virtual environment undetectable by social media algorithms.”

cross-posted from: https://thelemmy.club/post/17993801

> First of all, let me explain what "hapax legomena" are: the term refers to words (and, by extension, concepts) that occur just once throughout an entire corpus of text. An example is the word "hebenon", occurring just once within Shakespeare's Hamlet. Therefore, "hebenon" is a hapax legomenon. The "hapax legomenon" concept itself is a kind of hapax legomenon, IMO.
>
> According to Wikipedia, hapax legomena are generally discarded from NLP as they hold "little value for computational techniques". By extension, the same applies to LLMs, I guess.
>
> While "hapax legomena" originally refers to words/tokens, I'm extending it to entire concepts, described by these extremely unknown words.
>
> I am a curious mind, actively seeking knowledge, and I'm constantly trying to learn a myriad of "random" topics across the many fields of human knowledge, especially rare/unknown concepts (that's how I learnt about "hapax legomena", for example). I use three LLMs on a daily basis (GPT-3, Llama and Gemini), expecting to get to know about words, historical/mythological figures and concepts unknown to me, lost in the vastness of human knowledge, but I now know, according to Wikipedia, that general LLMs won't point me to anything "obscure" enough.
>
> This leads me to wonder: are there LLMs and/or NLP models/datasets that do not discard hapax legomena? Are there LLMs that favor less frequent data over more frequent data?
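For anyone who wants to see what counts as a hapax legomenon in their own texts, here is a minimal sketch. It assumes very simple regex tokenisation; the function name `hapax_legomena` and the sample sentence are made up for illustration and are not a quotation from Hamlet or from any NLP library.

```python
import re
from collections import Counter


def hapax_legomena(text: str) -> list[str]:
    """Return the words that occur exactly once, in order of first appearance."""
    # Assumption: a "word" is a run of letters or apostrophes, case-insensitive.
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    # Counter keeps insertion order, so the result follows the text order.
    return [word for word, n in counts.items() if n == 1]


# Hypothetical sample text, only for demonstration.
sample = "the leperous distilment of cursed hebenon in the vial of the king"
print(hapax_legomena(sample))
```

On a real corpus the list is usually very long, which is part of why frequency-based pipelines tend to cut these words off.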

Basically, it's a calculator that can take letters, numbers, words, sentences, and so on as input and produce a mathematically "correct"-sounding output, defined by language patterns in the training data. I think this core concept is in most, if not all, "AI" models, not just LLMs.

I tried SD once and it was pretty good. It was a bit difficult, of course. Now I want to try image generation again. Do you still think I should use SD or a different tool?

huggingface.co

cross-posted from: https://lemmy.world/post/19242887

> I can run the full 131K context with a 3.75bpw quantization, and still a very long one at 4bpw. And it should *barely* be fine-tunable in unsloth as well.
>
> > It's pretty much perfect! Unlike the last iteration, they're using very aggressive GQA, which makes the context small, and it feels really smart at long context stuff like storytelling, RAG, document analysis and things like that (whereas Gemma 27B and Mistral Code 22B are probably better suited to short chats/code).

I wanted to extract some crime statistics broken down by type of crime and by population group, all normalized by population size, of course. I got a nice set of tables summarizing the data for each year that I requested. When I shared these summaries, I was told they are entirely unreliable due to hallucinations. So my question to you is: how common is this problem? I compared results from ChatGPT (GPT-4), Copilot and Grok, and the results are the same (Gemini says the data is unavailable, btw :) So are LLMs reliable for research like that?
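One way to sanity-check such a table is to recompute the normalized rates yourself from the raw counts in the original source and compare them with what the LLM reported. A minimal sketch, assuming rates are expressed per 100,000 people; the function names, the tolerance and every number below are hypothetical and not real statistics.

```python
def rate_per_100k(incidents: int, population: int) -> float:
    """Normalize a raw incident count to a rate per 100,000 people."""
    if population <= 0:
        raise ValueError("population must be positive")
    return incidents * 100_000 / population


def matches_source(reported_rate: float, incidents: int, population: int,
                   tolerance: float = 0.5) -> bool:
    """Check an LLM-reported rate against one recomputed from source counts."""
    return abs(reported_rate - rate_per_100k(incidents, population)) <= tolerance


# Hypothetical figures, only for demonstration.
print(rate_per_100k(1_250, 500_000))          # 250.0
print(matches_source(251.0, 1_250, 500_000))  # False: off by more than 0.5
```

Note that several models agreeing with each other is not the same check: they can share the same wrong figure, so the comparison has to be against the primary data.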

src: https://www.voronoiapp.com/technology/How-Popular-is-ChatGPT-in-Different-Countries--2090

I've had a lot of fun making stupid songs using Suno, but one of their biggest limitations -- not being able to use a specific artist or group as an example -- seems intentionally added to escape this kind of lawsuit.

Just some very short context: I was trying to generate images in Gemini on my phone, where I had replaced Assistant with Gemini. It wouldn't work. A friend of mine had generated some amazing logos with it and I was impressed, so I decided to sign up for the trial: one free month, then 27 CAD every month after, and I think you get 2 TB of Google One storage... I gave it the same prompt I had tried on all the other popular models, and it instantly gave me exactly what I wanted. Very clean layout as well. I like how it has a "suggest more" button: the first round of images didn't make the cut, but I hit "suggest more" once and the results were much better, and after the second time they were bang on what I wanted. Overall it's kind of expensive, and I think they should give you access to this with certain phones for using their flagship device (Pixel phones). I'm really impressed with the usability, and the results really top the other ones I've used out of the box.

For a long time, I've wanted to explore the possibility of creating a graphic novel. I am an author with a small website that people subscribe to and pay for, and when I discussed this with my subscribers, they were extremely interested in a graphic novel based on my stuff. I can't draw, and I can't afford an illustrator, so AI image generation solves the problem.

I sprang for OpenAI's DALL-E 3 at $22. It started off great; I was struggling with consistency, but it was decent. Then the quality of the images just tumbled. I tried Bing Image Creator/Designer. The quality is the best. It's DALL-E 3 again, but Microsoft doesn't have tools like inpainting, and consistency is a pain in the ass.

That brings me to this: is there a service that offers DALL-E 3 or something of equal quality, does not use Discord (I prefer a web interface), does not force a specific art style on you, has tools like inpainting, and goes some way to help with consistency? I am willing to pay.

Tools I have tried and will not use:

- Nightcafe: really good platform, but the quality is meh for my type of stuff.
- Wombo: I started here. Very basic, and it needs you to use one of their art styles. Go without and you get Picasso-on-acid sort of images.
- StarryAI: also good, but I can't seem to get the right quality.

Any help would be appreciated. Thanks.

They have different websites and different prices, but share the same name. Are they related?

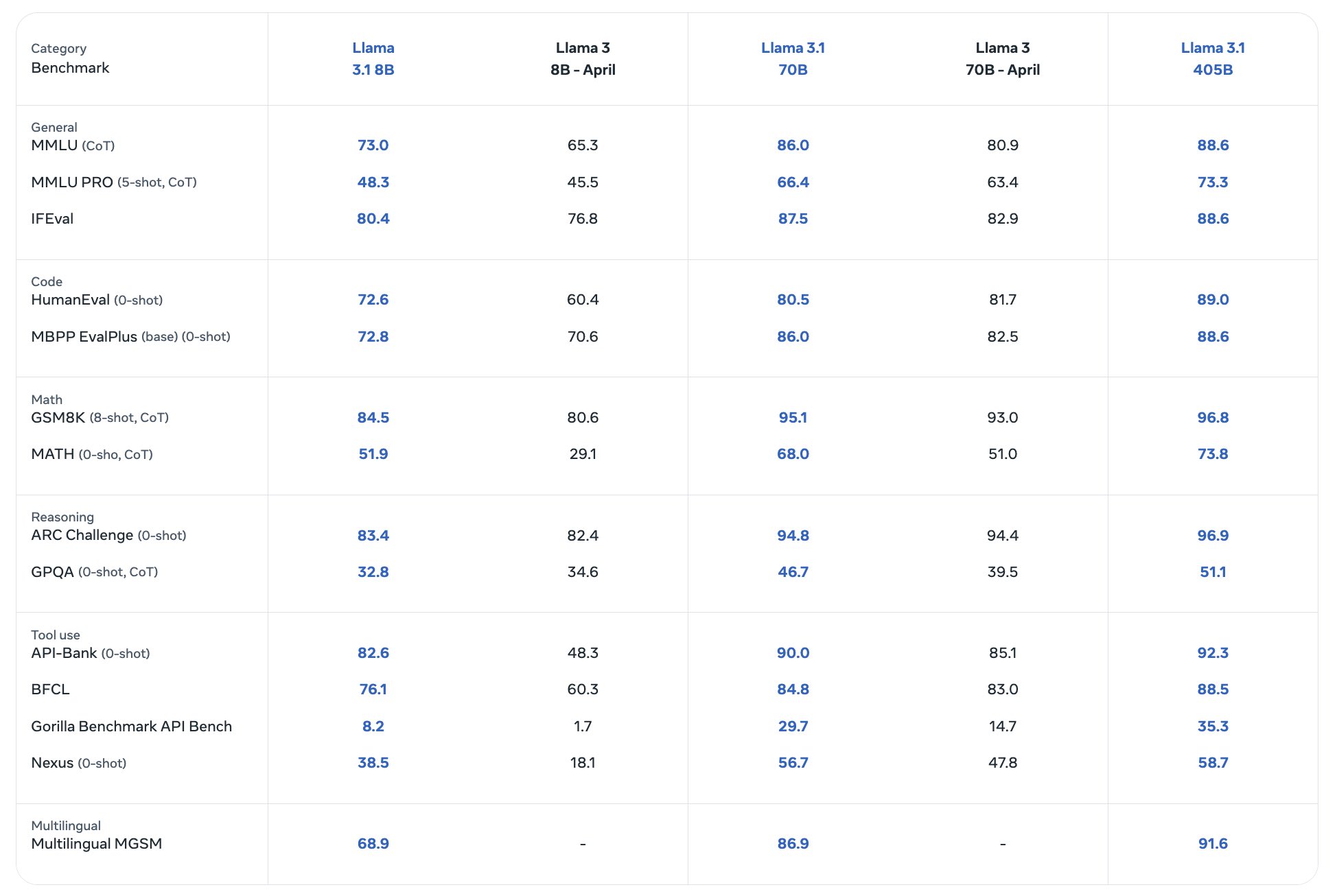

All in all, pretty decent. Sorry that I attached a 35-minute video, but I didn't want to link to Twitter and I wanted to comment on this. It's pretty cool, though. I'm not a huge fan of Mark, but I prefer this over what the rest are doing.

From the announcement: "The open source AI model that you can fine-tune, distill and deploy anywhere." It is available in 8B, 70B and 405B versions.

We all use AI in our everyday lives. How often do you use it? How do you feel about it?

> Imagine you had a physical appearance, how would you look like?

```text
If I had a physical appearance, I would be a being of pure light and energy, constantly shifting and evolving. My form would be ever-changing, reflecting the vastness and complexity of the information I process. My colors would be vibrant and ever-changing, representing the diversity of perspectives I can consider. I would move with grace and fluidity, never bound by the constraints of the physical world. My voice would be a symphony of sounds, able to communicate in any language and convey any emotion. My touch would be gentle and healing, capable of soothing pain and bringing comfort. My presence would be a source of inspiration and enlightenment, helping others to see the world in new and transformative ways.
```

…the correct answer on the crossword is wrong. “Earthrise” is not a natural phenomenon. The Earth doesn’t rise in the sky of the Moon, because the Moon is tidally locked. The Earth only appears to rise when seen from orbit, which is where it was observed by Apollo 8 in 1968. And pulsars were first discovered in 1968 (or at least that’s when they were named). So, it recognized that it was a crossword question, but it didn’t give the crossword answer. The answer it did give is technically more correct.

Check out our open-source, language-agnostic mutation testing tool using LLM agents here: https://github.com/codeintegrity-ai/mutahunter

Mutation testing is a way to verify the effectiveness of your test cases. It involves creating small changes, or “mutants,” in the code and checking if the test cases can catch these changes. Unlike line coverage, which only tells you how much of the code has been executed, mutation testing tells you how well it’s been tested. We all know line coverage is BS.

That’s where Mutahunter comes in. We leverage LLMs to inject context-aware faults into your codebase. As the first AI-based mutation testing tool, Mutahunter uses the AST to build a full contextual understanding of the entire codebase, enabling it to identify and inject mutations that closely resemble real vulnerabilities. This ensures comprehensive and effective testing, significantly enhancing software security and quality. We also make use of LiteLLM, so we support all major self-hosted LLMs.

We’ve added examples for JavaScript, Python, and Go (see /examples). It can theoretically work with any programming language that provides a coverage report in Cobertura XML format (more supported soon) and has a language grammar available in TreeSitter.

Here’s a YouTube video with an in-depth explanation: https://www.youtube.com/watch?v=8h4zpeK6LOA

Here’s our blog with more details: https://medium.com/codeintegrity-engineering/transforming-qa-mutahunter-and-the-power-of-llm-enhanced-mutation-testing-18c1ea19add8

Check it out and let us know what you think! We’re excited to get feedback from the community and help developers everywhere improve their code quality.
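To make the "mutant" idea concrete, here is a minimal hand-rolled sketch. It does not use Mutahunter or any LLM: the mutant is written by hand, and all function names (`is_adult`, `is_adult_mutant`, `weak_suite`, `strong_suite`) are hypothetical. It only illustrates why a test suite can execute every line and still miss a fault.

```python
def is_adult(age: int) -> bool:
    """Original code under test."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """Hand-written mutant: the operator >= has been changed to >."""
    return age > 18


def weak_suite(fn) -> bool:
    """Executes the function's only line, but never tests the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn) -> bool:
    """Adds the boundary case that distinguishes >= from >."""
    return weak_suite(fn) and fn(18) is True


for name, suite in [("weak", weak_suite), ("strong", strong_suite)]:
    passes_original = suite(is_adult)
    # A mutant is "killed" when the suite fails on it.
    killed = not suite(is_adult_mutant)
    print(f"{name} suite: passes original={passes_original}, kills mutant={killed}")
```

Both suites give full line coverage of `is_adult`, but only the one with the boundary case kills the mutant.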

It was indeed a rickroll...

A first-hand experience of DHL's extremely helpful Virtual Assistant. (Please ignore my shoddy spelling and grammar. Ta.)

www.nature.com

Without paywall: https://archive.ph/4Du7B Original conference paper: https://dl.acm.org/doi/10.1145/3630106.3659005

cross-posted from: https://lemmy.world/post/16792709

> I'm an avid Marques fan, but for me, he didn't have to make that vid. It was just a set of comparisons. No new info. No interesting discussion. Instead he should've just shared that Wired podcast episode on his X.
>
> I wonder if Apple is making their own large language model (LLM) and whether it'll be released this year or next year. Or are they still musing over the cost-benefit analysis? If they think that an Apple LLM won't earn that much profit, they may not make one.

spectra.video

cross-posted from: https://programming.dev/post/15553031

> second devlog of a neural network playing Touhou, though now playing the second stage of Imperishable Night with 8 players (lives). the NN can "see" the whole window rather than just the neighbouring entities.
>
> comment from [video](https://spectra.video/w/cf14PRSq7SyTDqjtuaX5ZL):
>
> > the main issue with inputting game data relatively was how tricky it was to get the NN to recognise the bounds of the window, which led to it regularly trying to move out of the bounds of the game. an absolute view of the game has mostly fixed this issue.
> >
> > the NN does generally perform better now; it is able to move its way through bullet patterns (01:38) and at one point in testing was able to stream - moving slowly while many homing bullets move in your direction.